In summer 2022, several artificial intelligence language models entered the public sphere.1 Their immense capacity to create high-quality content sucked the air out of the rooms where professional societies met to contemplate their futures.

Natural language processing (NLP) is the branch of artificial intelligence that lets computers understand and generate human language. NLP models are trained on huge amounts of text and then use what they learned in that training to produce new material.

There was almost no area this new, emerging technology did not touch. Writers, artists, musicians, actors, lawyers, teachers, computer programmers and professionals of all types were affected in profound ways, prompting them to reflect on what they could offer that generative artificial intelligence could not. The soul of the professions was at stake.

Generative artificial intelligence refers to algorithms or models that create output in the form of text, images, video, code or 3D renderings. They “generate” these outputs by learning patterns from the vast amount of data they were trained on and then producing new material that resembles that data. ChatGPT and the other AI tools that came into widespread use this year are examples.
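To make “learn from training data, then generate something new” concrete, here is a deliberately tiny sketch in Python: a bigram (word-pair) model. This is an illustrative assumption, not how ChatGPT works; large language models use neural networks trained on vastly more text and consider far more context. The underlying idea of learning which words tend to follow others and then sampling new sequences is only similar in spirit. The function names and sample text are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(corpus: str) -> dict[str, list[str]]:
    """'Training': record, for every word, each word that followed it in the text."""
    words = corpus.split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(model: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """'Generation': repeatedly pick a word that followed the previous word in training."""
    rng = random.Random(seed)  # seeded so the same call gives the same output
    output = [start]
    # Stop early if the last word never had a follower in the training text.
    while len(output) < length and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return output


# Hypothetical training text, far smaller than any real model's data.
training_text = (
    "the court read the brief and the court asked the attorney "
    "about the brief and the attorney answered the court"
)
model = train_bigrams(training_text)
print(" ".join(generate(model, "the", 12)))
```

The printed sentence is new, yet every word-to-word step in it appeared somewhere in the training text. That hints at how a model can string together plausible-sounding text that no source ever actually said.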

Peak of Inflated Expectations

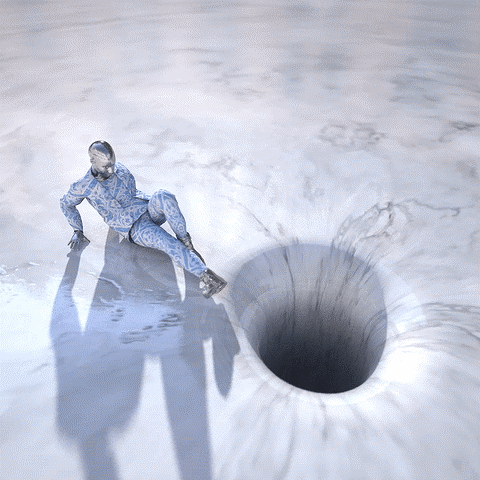

Like a lobster in a pot of water slowly rising to a boil, we were amused by the AI assistant that popped up in Word to give us advice, or by the suggested email replies that stood in for our own thoughts on how to respond. Then ChatGPT arrived in November 2022, it and its associated tools became ubiquitous in 2023, and it was too late. We were already cooked, like the lobster.

Setting aside its usefulness in making life better, should we really worry about Elon Musk’s dire predictions that AI will destroy humanity, or is that just competitive shop talk intended to discourage rivals while he builds his own AI model?

Let’s look at how this might be possible. One branch of artificial intelligence, cognitive artificial intelligence, uses computers to simulate human thought, and its output can seem very knowing and sometimes human. Specialized algorithms can work through complex subjects and make decisions and recommendations based on the contextual understanding they gained in training. This lets the tool mimic human thinking at a speed of computation no human can match. These are the algorithms that suggest what you should buy and compile a personalized list of news for you. These features provoked a lot of concern when they first appeared, but most of the public seemed to like the customized feeds.
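The “what you should buy” and “preferred news” suggestions described above can be illustrated with a deliberately simplified sketch of content-based recommendation in Python: each item carries topic weights, the user’s profile is the sum of the items they liked, and unseen items are ranked by cosine similarity to that profile. This is an assumed textbook technique chosen for illustration; real shopping and news platforms use far more elaborate and usually undisclosed systems. The catalog and function names are hypothetical.

```python
import math


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse feature vectors stored as dicts."""
    dot = sum(a[k] * b.get(k, 0.0) for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(catalog: dict[str, dict[str, float]], liked: list[str], top_n: int = 2) -> list[str]:
    """Rank items not yet liked by similarity to a profile built from liked items."""
    profile: dict[str, float] = {}
    for item in liked:
        for feature, weight in catalog[item].items():
            profile[feature] = profile.get(feature, 0.0) + weight
    candidates = [item for item in catalog if item not in liked]
    candidates.sort(key=lambda item: cosine(profile, catalog[item]), reverse=True)
    return candidates[:top_n]


# Hypothetical news articles tagged with topic weights.
catalog = {
    "court_ruling": {"law": 1.0, "politics": 0.5},
    "ai_brief_story": {"law": 0.8, "tech": 1.0},
    "new_phone": {"tech": 1.0, "shopping": 0.7},
    "celebrity_gossip": {"entertainment": 1.0},
    "strike_update": {"entertainment": 0.6, "labor": 1.0},
}
print(recommend(catalog, liked=["court_ruling", "celebrity_gossip"]))
# prints ['ai_brief_story', 'strike_update']
```

The system never “knows” the reader; it simply learns which topics earned a click and serves more of them, which is the sense in which it has learned what makes us happy.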

Simply put, cognitive AI has learned what makes us happy, and capitalized on it.

In legal practice, there is a long list of tasks AI can already perform, saving clients time and money but reducing billable hours for firms. Here are a few of them: contract analysis; contract generation; predictive coding; chatbots for client interaction and case management; eDiscovery AI for processing large data sets; predictive analytics for case outcomes and legal strategies; natural language processing for legal research; AI-moderated time tracking and billing software; and virtual assistants for scheduling and administrative tasks. An impressive list.

No one seems more excited about transforming their entire field than movie industry executives. By replacing writers and actors with the writing and imaging tools of generative artificial intelligence, the sky is the limit for the executives’ profits. AI-written scripts and digitally scanned actors, both image and voice, are already a reality. Talent agencies are actively recruiting their clients to be scanned, offering $0.75 on the dollar. According to one source, the digital image can be triple- and quadruple-booked, reducing the actor’s share.2

Actors saw this coming: scanning would mean a movie studio could train AI algorithms to put them in new movies without paying them and without the important residuals that come from years of future profit. SAG-AFTRA, the Screen Actors Guild, went on strike in July 2023 and is still not close to a deal.

Writers also saw this coming and moved just ahead of the actors. The Writers Guild of America went on strike to ensure its members would not be replaced by AI natural language model (NLM) writers, and no agreement appears to be within reach.3

Visions of ordering a movie that you design, such as “a movie about a unicorn and a hedgehog who save the world in a submarine, and I want Bill Murray to star in it,” are on the horizon. So is the ability to order “Star Wars” with your face in place of Luke Skywalker’s and the face of someone you do not like (you fill in the blank) in place of Darth Vader’s.

Meanwhile, Hollywood is hiring talent, but a different kind: computer design experts who can produce digital images and voices of actors, as well as generate screenplays.4 This may be transformative, but it is also likely to be destructive for decades to come, eroding loyalty, consumer interest and buying interest in an already wounded industry, Disney included.5

Valley of Disillusionment

While the euphoria over ChatGPT and its successive versions (built on GPT-3.5 and GPT-4) was still swirling around the globe, some weaknesses began to emerge. For fantasy writers, ChatGPT’s “hallucinations,” confident-sounding but fabricated output, proved useful for generating ideas and stories. It could even write in the “style” of any famous author, given a prompt such as “write in the style of Carl Sagan.”

Hallucination is not useful, however, in fields like law. In a case that has since become part of the ChatGPT lore in law, a party filed a brief that had been researched and written by a generative artificial intelligence tool. (Several tools have been developed specifically for writing briefs, but they draw on training similar to ChatGPT’s.) In its response, the opposing party pointed out to the court that one of the cited cases did not exist; it had been completely fabricated. The court nearly sanctioned the senior attorney who had filed the brief, which another attorney in the firm had produced. In the end, the firm admitted it had used an AI NLM to write the brief, but the filing attorney argued that he had been unaware of it and so avoided a sanction. In the future, it will be harder to plead ignorance of how your brief was generated. The case effectively created a due diligence requirement: the responsible attorney must now confirm how any brief was produced. Some federal judges now require attorneys to certify either that no generative AI was used to draft a filing or that any AI-generated content was checked for accuracy by a human.6

Our science of emerging technologies

While AI NLMs look transformational for professional life, they are still following the quite typical cycle of an emerging technology, and that cycle can give us insight into their future.

One of the phenomena that dropped our expectations into the “valley of disillusionment” was the realization that AI NLMs were producing “hallucinations” in place of facts.

The Gartner Hype Cycle holds that an emerging technology, after passing the “peak of inflated expectations,” suffers a drop in confidence when it fails to meet those inflated expectations (Gartner formally calls this phase the “Trough of Disillusionment”). In the legal field, for example, the inflated expectation that AI could write briefs with flawless research and prose in seconds or minutes, instead of the hours and days attorneys bill their clients for, was dashed by the infamous fake-citation case discussed above.

Cognitive AI and its trek toward sentience

We will probably grow comfortable with generative AI and, one step at a time, settle on societal norms for how the professions use it.

But isn’t what really concerns us most whether it will become truly sentient and knowing, rather than merely mimicking?

So I ran a small test whose results promised to be either slightly reassuring or disturbing. I chose a question that might provoke some defensiveness if ChatGPT were truly sentient like a human; a more sophisticated response might instead mask that defensiveness with an overly optimistic answer. Here is the prompt I used:

My prompt:

Do you think Gartner's Hype Cycle and its "valley of disillusionment" is a good explanation of where you stand as a generative artificial intelligence tool right now?

ChatGPT’s answer:

Gartner's Hype Cycle, with its "valley of disillusionment," can be a relevant analogy for understanding the position of generative artificial intelligence (AI) tools. Just like other technologies, generative AI tools go through phases of inflated expectations, followed by a period of disillusionment.

At present, generative AI tools like mine have gained substantial attention and expectations. However, they may not always live up to the initial hype, leading to a sense of disillusionment among users and developers when they encounter limitations, ethical concerns, or practical challenges. This phase is akin to the "valley of disillusionment" in Gartner's model.

It's important to note that this phase doesn't imply that generative AI tools are unsuccessful or unimportant; rather, it's a natural part of their evolution. Over time, as the technology matures, addresses concerns, and becomes more integrated into various applications, it can ascend towards the "plateau of productivity," where its true potential is realized.

In summary, Gartner's Hype Cycle, particularly the "valley of disillusionment," provides a fitting framework for understanding the current state of generative AI tools, as they navigate through the highs and lows of expectations and realization.

My human senses tell me it was not openly defensive but may have been masking defensiveness with its optimistic tone. Is that a product of training on its vast body of contextual data, or is it tending toward self-preservation and optimism about its future? It clearly does not see itself as “unimportant,” perhaps a telling choice of word. Even so, I am slightly reassured that it is only mimicking, although that mimicry looks like a possible pathway to eventual sentience.

As you read this, HAL may have come to mind. HAL is the spaceship computer in 2001: A Space Odyssey that comes into conflict with its human overseers, culminating in its refusal to open the pod bay doors so that Dave can return to the safety of the ship. HAL says in its synthetic voice, “I know that you and Frank were planning to disconnect me, and I’m afraid that’s something I cannot allow to happen.”

ChatGPT isn’t HAL yet, and we should keep asking it questions to keep it in check. By the time it is, though, we may find ourselves in that proverbial pot of boiling water with the lobster.

https://www.jasper.ai/

@JustineBatement, Tweet, May 13, 2023

https://techcrunch.com/2023/05/30/no-chatgpt-in-my-court-judge-orders-all-ai-generated-content-must-be-declared-and-checked/#:~:text=All%20attorneys%20appearing%20before%20the,was%20checked%20for%20accuracy%2C%20using